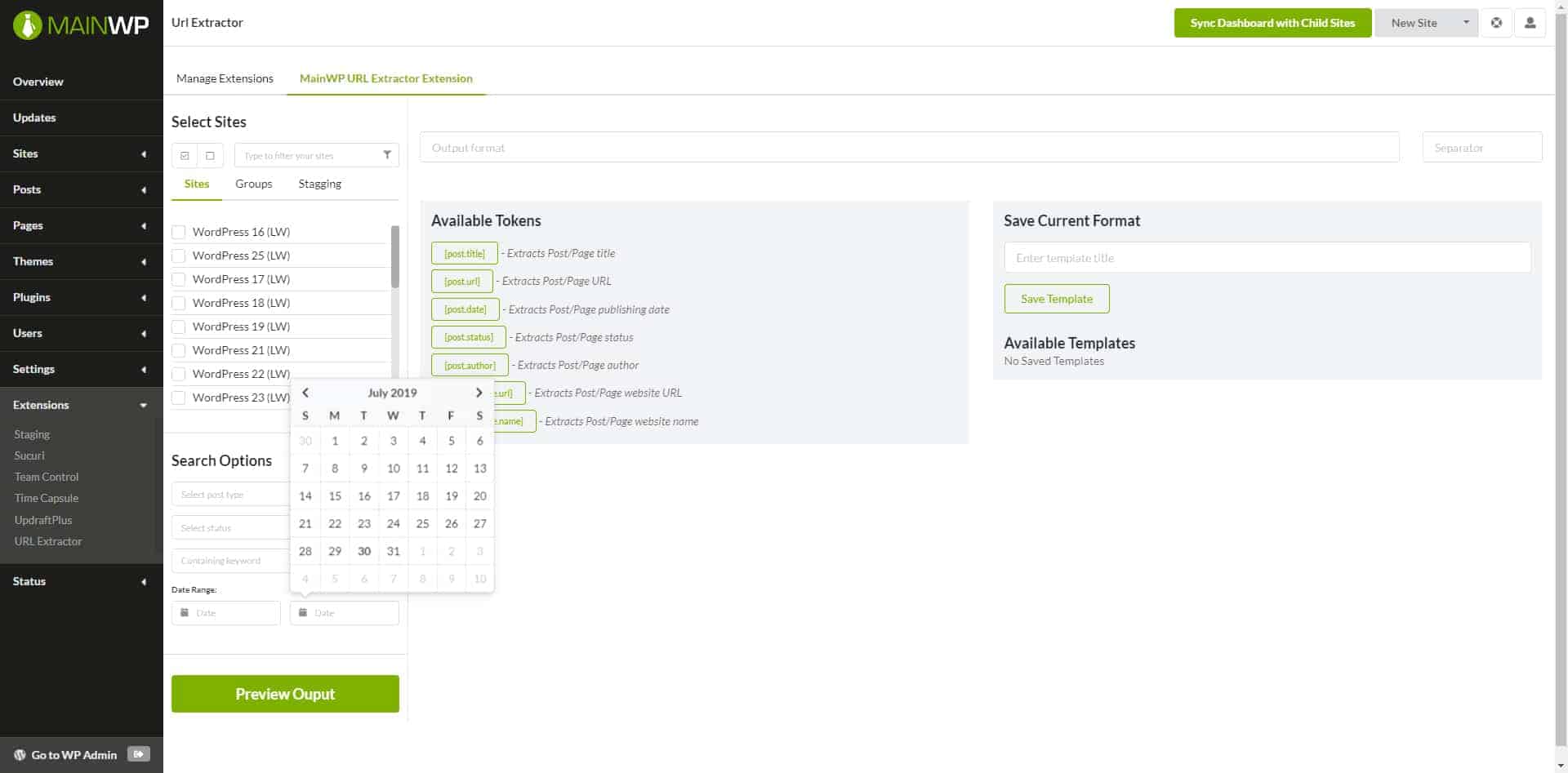

Article URL extractor plus

Open the 3i Data Scraping scraper and click on "New Project". Provide the URL that you wish to extract from; here we submit the Newsweek URL we have chosen. 3i Data Scraping will render the site within the app. Begin by clicking the title of the first news article on the page. It will be highlighted in green to indicate that it has been selected, and the rest of the headlines on the page will be highlighted in yellow. Then click the second headline on the page to select the rest. They will now be highlighted in green. In the left-hand sidebar, rename the selection to headline.

Article URL extractor install

A web data scraper lets you load the website you want to scrape and click on the data that you wish to extract. You can download and install it now. The scraper then automates the procedure and extracts the data into an Excel spreadsheet. In this example, we will extract the news feed pages from the Newsweek website.

Scraping News Article Data

Make sure you download and install the 3i Data Scraping scraper before you start.
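As a rough illustration of what "extracting data into a spreadsheet" amounts to, the sketch below writes headline and URL pairs as CSV, a format Excel can open. It is not how the 3i Data Scraping scraper exports data; the function name `headlines_to_csv`, the column names, and the sample rows are all placeholders invented for this example.

```python
import csv
import io


def headlines_to_csv(rows):
    """Return CSV text with a header row followed by (headline, url) rows."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["headline", "url"])  # hypothetical column names
    writer.writerows(rows)
    return buffer.getvalue()


# Placeholder rows standing in for scraped headlines.
sample_rows = [
    ("Example headline one", "https://example.com/one"),
    ("Example headline, with a comma", "https://example.com/two"),
]
print(headlines_to_csv(sample_rows))
```

To produce a file instead of a string, the same writer can be pointed at `open("headlines.csv", "w", newline="", encoding="utf-8")`. The `csv` module quotes fields that contain commas, so headlines with punctuation stay in a single column.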

Article URL extractor free

News websites hold a lot of important data. This type of data can be used for financial analysis, sentiment analysis, and much more. You might therefore need to extract data from news websites into an Excel spreadsheet for further analysis. Using a web data scraper makes that an easy job.

Easy and Free Web Scraping

For this project, we will use the 3i Data Scraping scraper, a powerful data scraper that can scrape data from any website.

About the Page Links Scraping Tool

This tool offers a fast and easy way to scrape links from a web page. Listing the links, domains, and resources that a page links to tells you a lot about the page. Reasons for using a tool such as this are wide-ranging, from Internet research and web page development to security assessments and web page testing.

The tool is built on Lynx, a simple and well-known command line tool. Lynx is a text-based web browser popular on Linux-based operating systems. It can also be used for troubleshooting and testing web pages from the command line. Being a text-based browser, it cannot display graphics, but it is a handy tool for reading text-based pages. It was first developed around 1992 and supports old-school Internet protocols, including Gopher and WAIS, along with the more commonly known HTTP, HTTPS, FTP, and NNTP.

API for the Extract Links Tool

Another option for accessing the extract links tool is to use the API. Rather than using the form, you can link directly to the API resource with the ?q parameter set to the address you wish to extract links from. The API is simple to use and aims to be a quick reference tool. Like all our IP Tools, it has a limit of 100 queries per day; you can increase the daily quota with a Membership.

Running the tool locally

Extracting links from a page can be done with a number of open source command line tools. Lynx, a text-based browser, is perhaps the simplest: run lynx -listonly -dump followed by the address of the page.
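For readers who would rather not install Lynx, a similar list of links can be produced with a few lines of Python. This is only a minimal sketch using the standard library's `html.parser`; it is not how the online tool or Lynx works internally, and the names `LinkCollector` and `extract_links`, along with the sample markup and URLs, are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Turn relative links into absolute ones.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return all anchor links found in an HTML string, in document order."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    return parser.links


# Placeholder markup standing in for a downloaded page.
sample = '<p><a href="/world">World</a> <a href="https://example.org/a">A</a></p>'
for link in extract_links(sample, "https://example.com/news/"):
    print(link)
```

The sketch only looks at `<a href>` attributes, so resources referenced by images, scripts, or stylesheets are not listed. Fetching the page itself (for example with `urllib.request`) is left out to keep the example self-contained.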
